Virtual Reality Laboratory

The VR Lab of the A.I. group at Bielefeld University was founded in 1995. The central research lines of the lab are intelligent computer graphics and human-machine interaction.

Research Agenda

The research focus of this laboratory is on intelligent human-machine interaction in virtual worlds involving natural language, gesture, and facial expression. What are the roles played by emotion, attention in space, or knowledge of the dialog partner? How can they be captured in cognitive architectures? Technologies range from real-time processing of speech, gesture, and gaze, through acoustic, visual, and tactile stimulation, to immersive interaction with virtual agents. System prototypes are developed that model cognitive capabilities and exploit them in technical applications.

History

Building on earlier research in natural language processing, expert system techniques, and human-machine communication, the AI Lab at Bielefeld found a new mission in 1995 when we started the AI and Computer Graphics Lab, centered around the VIENA and CODY projects, both of which had started in 1993. Our major motivation was that knowledge-based systems and other AI techniques can be used to establish an intuitive communication link between humans and highly interactive 3D graphics. Realizing that Virtual Reality is a most comprehensive communication medium and a multimodal interface to a host of multimedia applications, we took the natural step of extending the Lab's mission to Virtual Reality, where we are collaborating with the Institute for Media Communication (IMK), now Fraunhofer IAIS, which is part of the Fraunhofer Gesellschaft. Our focus is now on building Intelligent VR Interfaces that yield highly interactive construction and design environments with the support of knowledge-based techniques. The SGIM project extended our efforts to include multimodal speech and gesture interfaces.

After the success of several projects, the planning phase for a major lab reconstruction and upgrade started in 2000. With the launch of the Virtuelle Werkstatt, the previously installed one-sided projection wall and responsive workbench have been complemented or replaced by several new systems, e.g., TRI-SPACE, a three-sided large-screen projection system similar to the CAVE or cyberstage systems, and CYKLOOP, a portable large-screen VR system.

Hardware

Projection Technology

- TRI-SPACE, an immersive VR-display system providing 3 stereoscopic screens using 6 digital JVC D-ILA projectors by 3Dims (see Figure 1)

- Interaction Space, three portrait HD panels by NEC

- CYKLOOP, a portable large screen VR-display with one monoscopic screen.

- HMD, a Head Mounted Display Z800 by eMagin

- 8 channel digital 3D audio system

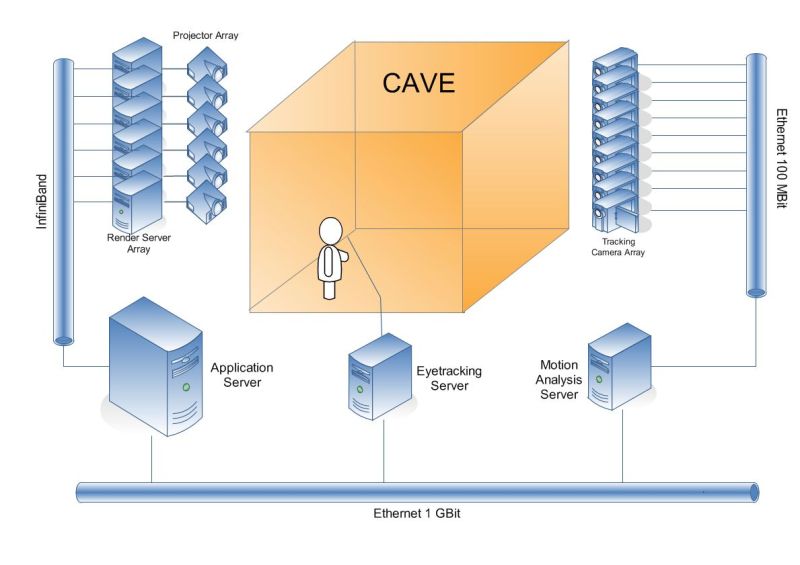

Holodeck Compute Cluster

- for interactive computer graphics and multimodal interaction

- Infiniband Network

- 7 nodes, 56 CPU cores, 14 GPUs

  - Intel Xeon Dual Quad (8 Cores), 2.66 GHz, 8 GB RAM

  - 2x NVIDIA Quadro FX 5600

- 6 nodes, 24 (+24 HT) CPU cores, 6 GPUs

  - Intel Core i7 950, 3.0 GHz, 6 GB RAM

  - NVIDIA GTX 580, 3 GB RAM

- 1 node, 4 CPU cores, 1 GPU, 6 channel render server in one node

  - Intel Core2 Quad (x4), 2.66 GHz, 8 GB RAM

  - 3x NVIDIA GeForce 8800 GTX

Interaction Technology

- An optical (cable-less) tracking system (see Figure 2) by ART

- Two optical (cable-less) 5 finger gloves by ART

- Two optical (cable-less) 3 finger gloves with tactile feedback (see Figure 3) by ART

- One eye tracker (see Figure 4), ViewPoint PC-60, BS007, from Arrington Research

Software

Some major software tools used in the lab:

- Modelling: Blender, 3D Studio MAX

- Visualization & VR:

  - InstantReality

  - OpenSG

  - AVANGO with the Chromium library for OpenGL distribution, SGI Performer

  - previous tools were Open Inventor 2.1, VRML, and the proprietary VIENA, CODY, and SGIM project viewers

- Communication: WAS - WBS Agent System

- Sound: FMOD by Firelight Technologies Pty, Ltd.

- Further tools: ECLIPSE, CLIPS, Elk Scheme, Vanilla Sound Server (VSS) of the NCSA

Projects

See projects page.

Contact

Ipke Wachsmuth (E-Mail: ipke.wachsmuth@uni-bielefeld.de) or

Thies Pfeiffer (E-Mail: tpfeiffe@techfak.uni-bielefeld.de)

Thies Pfeiffer, 2011-11-30 Ipke Wachsmuth, 2017-10-12